Cyngn (NASDAQ: CYN), which builds autonomous solutions for industrial enterprises, started in the NVIDIA Inception program years before going public, when it was a startup backed by leading venture capitalists like Benchmark and Andreessen Horowitz.

Now, Cyngn is putting the power of NVIDIA accelerated computing and CUDA software to use with the Cyngn DriveMod solution for industrial autonomous mobile robots (AMRs). DriveMod has already been installed on material handling vehicles from established industrial vehicle manufacturers like BYD, Motrec, and Columbia Vehicle Group. Cyngn deploys DriveMod industrial vehicles to customers of all sizes, ranging from private manufacturing and distribution companies to Fortune 100 corporations like John Deere.

In recent years, Cyngn has announced sophisticated features that sit at the forefront of what autonomous vehicles can do.

High-Performance CV & AI for Perception

With several different sensor modalities on its DriveMod vehicles, Cyngn leans on the NVIDIA Jetson edge AI platform to process and extract intelligence from multiple sensor streams in real time. One underserved market need that Cyngn has addressed with proprietary computer vision solutions is detecting non-standard pallets, using algorithms that can be flexibly applied to pallets of different sizes.

Let’s examine this interesting computer vision capability of the DriveMod Forklift:

Figure 1: DriveMod Forklift detecting attributes of a stack of finished wood goods

Figure 1 captures a great deal of insight for an autonomous forklift. Namely, the DriveMod system detects the number of units in a stack, the height of each unit in the stack, and the general attributes of the entire stack, like center and orientation relative to the forklift. Once the DriveMod Forklift approaches the stack, the “docking” perception system is activated for critical close-range information:
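
To make the stack attributes in Figure 1 more concrete, here is a minimal geometric sketch. It assumes, hypothetically, that an upstream detector already reports each unit's vertical extent and the left and right corners of the stack's front face in the forklift frame (x forward, y to the left). `UnitDetection` and `stack_attributes` are illustrative names, not part of DriveMod.

```python
import math
from dataclasses import dataclass


@dataclass
class UnitDetection:
    """Hypothetical per-unit detection: vertical extent in meters."""
    z_bottom: float
    z_top: float


def stack_attributes(units, left_corner, right_corner):
    """Summarize a detected stack (illustrative sketch, not Cyngn's method).

    left_corner / right_corner: (x, y) of the stack's front face, as seen
    from the forklift, in the forklift frame (x forward, y to the left).
    """
    # Sort bottom-to-top so heights are reported in stacking order.
    ordered = sorted(units, key=lambda u: u.z_bottom)
    heights = [u.z_top - u.z_bottom for u in ordered]
    # Stack center: midpoint of the front face.
    center = ((left_corner[0] + right_corner[0]) / 2.0,
              (left_corner[1] + right_corner[1]) / 2.0)
    # Yaw of the face relative to the forklift: 0 when the face runs
    # straight along +y (squarely facing the forklift), positive when
    # the stack is rotated counterclockwise.
    dx = left_corner[0] - right_corner[0]
    dy = left_corner[1] - right_corner[1]
    yaw = math.atan2(-dx, dy)
    return {"count": len(units), "heights": heights,
            "center": center, "yaw": yaw}


# Two stacked units, with the stack slightly rotated relative to the forklift.
units = [UnitDetection(0.6, 1.2), UnitDetection(0.1, 0.6)]
info = stack_attributes(units, left_corner=(1.95, 0.5), right_corner=(2.05, -0.5))
print(info["count"], [round(h, 2) for h in info["heights"]],
      round(math.degrees(info["yaw"]), 1))
```

Sorting by `z_bottom` keeps the per-unit heights in stacking order regardless of the order in which the detector reports units.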

Figure 2: DriveMod Forklift detecting detailed attributes of pocket openings

In Figure 2 above:

- White plus signs: indicate the center (Y direction) and middle (Z direction) of the entire pallet pocket region under a unit load

- Long horizontal green lines: indicate the top and bottom (Z direction) and width (Y direction) of the pallet pocket region under a unit load

- Short horizontal green lines: indicate the middle (Z direction) of each pallet pocket
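
The markers in Figure 2 are simple geometric summaries of the detected pocket boundaries. The sketch below assumes those boundaries have already been found; `PocketRegion` and `pocket_markers` are hypothetical names, and the numbers are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class PocketRegion:
    """Hypothetical extents (meters) of the pocket region under one unit load.

    y is lateral (width) and z is vertical (height), matching Figure 2.
    """
    y_left: float
    y_right: float
    z_bottom: float
    z_top: float


def pocket_markers(region, pocket_z_extents):
    """Compute the Figure 2 marker positions (illustrative sketch).

    Returns:
      plus_sign:   (center in Y, middle in Z) of the whole pocket region
      width:       lateral width of the region (long green lines, Y)
      pocket_mids: middle height of each pocket (short green lines, Z)
    """
    plus_sign = ((region.y_left + region.y_right) / 2.0,
                 (region.z_bottom + region.z_top) / 2.0)
    width = abs(region.y_left - region.y_right)
    pocket_mids = [(z_bottom + z_top) / 2.0 for z_bottom, z_top in pocket_z_extents]
    return plus_sign, width, pocket_mids


region = PocketRegion(y_left=0.6, y_right=-0.6, z_bottom=0.10, z_top=0.20)
plus_sign, width, mids = pocket_markers(region, [(0.10, 0.20), (0.12, 0.20)])
print(plus_sign, width, mids)
```

In a sketch like this, the plus sign's Y value would act as the lateral offset for centering the forks and its Z value as a target fork height.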

The application-specific implementations above are unique to the type of AMR and the work that it needs to get done. In fact, Cyngn collaborated on this solution with Arauco, a world-leading wood and pulp products supplier to the furniture and construction industries, which resulted in Arauco placing a pre-order for 100 DriveMod Forklifts.

Meanwhile, a fundamental need for all autonomous systems is the ability to detect obstacles:

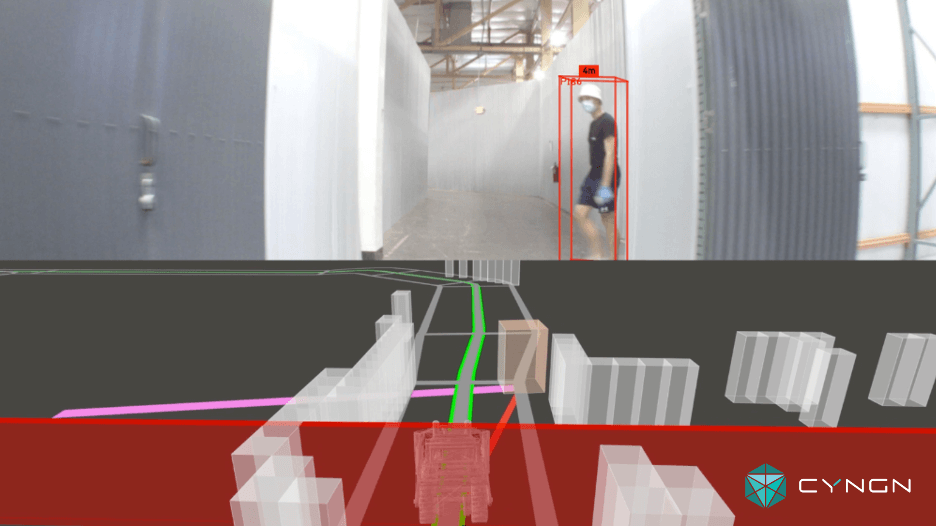

Figure 3: DriveMod AMR detecting an operator while identifying drivable area in a narrow corridor

Figure 3 shows the DriveMod perception system’s ability to detect and classify obstacles in the vicinity of the AMR. Contextual information about the scene, such as the drivable area and the vehicle’s planned path, produces the “red fence,” which indicates that the AMR has stopped for an obstacle projected to enter its path. This AI-powered system offers 360-degree detection and can be faster and more attentive than human operators, with detections and decisions measured in milliseconds.
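
The stop decision in Figure 3 combines a detected obstacle with the planned path. A minimal sketch of that kind of check follows. It assumes, hypothetically, a constant-velocity prediction of the obstacle and a fixed-width corridor around a polyline path; the function names, horizon, and corridor width are illustrative, not DriveMod parameters.

```python
import math


def point_segment_distance(p, a, b):
    """Euclidean distance from point p to segment ab (2D tuples)."""
    ax, ay = a
    bx, by = b
    px, py = p
    vx, vy = bx - ax, by - ay
    seg_len_sq = vx * vx + vy * vy
    if seg_len_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment and clamp to its endpoints.
    t = max(0.0, min(1.0, ((px - ax) * vx + (py - ay) * vy) / seg_len_sq))
    return math.hypot(px - (ax + t * vx), py - (ay + t * vy))


def should_stop(path, half_width, obstacle_pos, obstacle_vel, horizon_s=2.0, dt=0.1):
    """Return True if a constant-velocity prediction of the obstacle enters
    the corridor of +/- half_width around the planned path within horizon_s.
    """
    steps = int(round(horizon_s / dt))
    for k in range(steps + 1):
        t = k * dt
        p = (obstacle_pos[0] + obstacle_vel[0] * t,
             obstacle_pos[1] + obstacle_vel[1] * t)
        if any(point_segment_distance(p, path[i], path[i + 1]) <= half_width
               for i in range(len(path) - 1)):
            return True
    return False


path = [(0.0, 0.0), (10.0, 0.0)]
# A person 3 m to the side, walking toward the path at 2 m/s.
print(should_stop(path, 0.75, obstacle_pos=(5.0, 3.0), obstacle_vel=(0.0, -2.0)))
```

A production system would use richer motion predictions and the vehicle's own footprint, but the structure (predict, then test against the path corridor) is the same idea the red fence visualizes.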

Given the multitude of CV and AI models that run on the AMR in real time and the AI training required to produce accurate on-vehicle models, Cyngn uses NVIDIA’s software libraries and tools to optimize its AI solutions:

- Broadly, CUDA is used to parallelize GPU tasks both on the AMR’s embedded computer and in AI training, while the NVIDIA TensorRT software development kit optimizes AI inference on the embedded computer.

- The NVIDIA Collective Communications Library (NCCL) is used for scaled multi-GPU, multi-node training, so that Cyngn engineers can keep improving DriveMod with large data repositories collected from the field.
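
In data-parallel training, each GPU computes gradients on its own slice of the data, and an all-reduce collective sums them so every GPU applies the same update. NCCL provides this collective (`ncclAllReduce`) and can implement it with a ring algorithm, among others. The pure-Python simulation below illustrates the ring pattern (a reduce-scatter phase followed by an all-gather phase) on plain lists; it is a teaching sketch that models no actual GPUs or NCCL calls.

```python
def split_chunks(vec, n):
    """Split vec into n contiguous chunks whose sizes differ by at most one."""
    k, m = divmod(len(vec), n)
    bounds = [i * k + min(i, m) for i in range(n + 1)]
    return [list(vec[bounds[i]:bounds[i + 1]]) for i in range(n)]


def ring_allreduce(buffers):
    """Simulate a sum all-reduce over len(buffers) ranks arranged in a ring."""
    n = len(buffers)
    chunks = [split_chunks(b, n) for b in buffers]

    # Reduce-scatter: each rank passes one chunk to its right neighbor,
    # which adds it in. After n-1 steps, rank r holds the full sum of
    # chunk (r + 1) % n.
    for step in range(n - 1):
        sends = [(r, (r - step) % n) for r in range(n)]
        payloads = [list(chunks[r][c]) for r, c in sends]  # snapshot first
        for (r, c), data in zip(sends, payloads):
            dst = (r + 1) % n
            chunks[dst][c] = [x + y for x, y in zip(chunks[dst][c], data)]

    # All-gather: circulate each completed chunk around the ring.
    for step in range(n - 1):
        sends = [(r, (r + 1 - step) % n) for r in range(n)]
        payloads = [list(chunks[r][c]) for r, c in sends]
        for (r, c), data in zip(sends, payloads):
            chunks[(r + 1) % n][c] = data

    return [[x for chunk in rank_chunks for x in chunk] for rank_chunks in chunks]


# Three "GPUs", each holding its own gradient vector.
grads = [[1, 2, 3, 4, 5], [10, 20, 30, 40, 50], [100, 200, 300, 400, 500]]
print(ring_allreduce(grads))
```

The appeal of the ring pattern is that each rank only ever talks to its neighbors and sends roughly 2(n-1)/n of its buffer in total, so per-GPU traffic stays nearly constant as more GPUs join.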

High-Definition Site Mapping and Localization

For an AMR to get work done with the exciting sensing and perception capabilities outlined above, it must also know where that work needs to happen. The on-vehicle hardware that runs the DriveMod autonomy stack is the same hardware Cyngn uses to map the environment before deployment. By simply driving DriveMod vehicles manually around the facility, Cyngn can produce rich 3D representations of the site, then flip a switch to run the vehicles autonomously.
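
Building a map by driving amounts to placing every lidar scan into a common map frame using the vehicle's pose at the moment the scan was taken. The sketch below shows only that accumulation step, in 2D for brevity (DriveMod's maps are 3D and its poses have six degrees of freedom). It assumes the poses are already known, for example from odometry refined by scan matching, and the function names are hypothetical.

```python
import math


def transform_scan(pose, points):
    """Move sensor-frame points (x, y) into the map frame.

    pose is (x, y, yaw) of the vehicle in the map frame; this sketch
    assumes the lidar sits at the vehicle origin.
    """
    tx, ty, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    # Rotate by yaw, then translate by the vehicle position.
    return [(tx + c * px - s * py, ty + s * px + c * py) for px, py in points]


def build_map(scans_with_poses):
    """Accumulate every (pose, scan) pair into a single map-frame point list."""
    global_map = []
    for pose, points in scans_with_poses:
        global_map.extend(transform_scan(pose, points))
    return global_map


# Two scans of the same wall point, taken from different poses, land on
# the same map coordinate (up to floating-point rounding).
scans = [
    ((0.0, 0.0, 0.0), [(2.0, 1.0)]),
    ((2.0, 0.0, math.pi / 2), [(1.0, 0.0)]),
]
print(build_map(scans))
```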

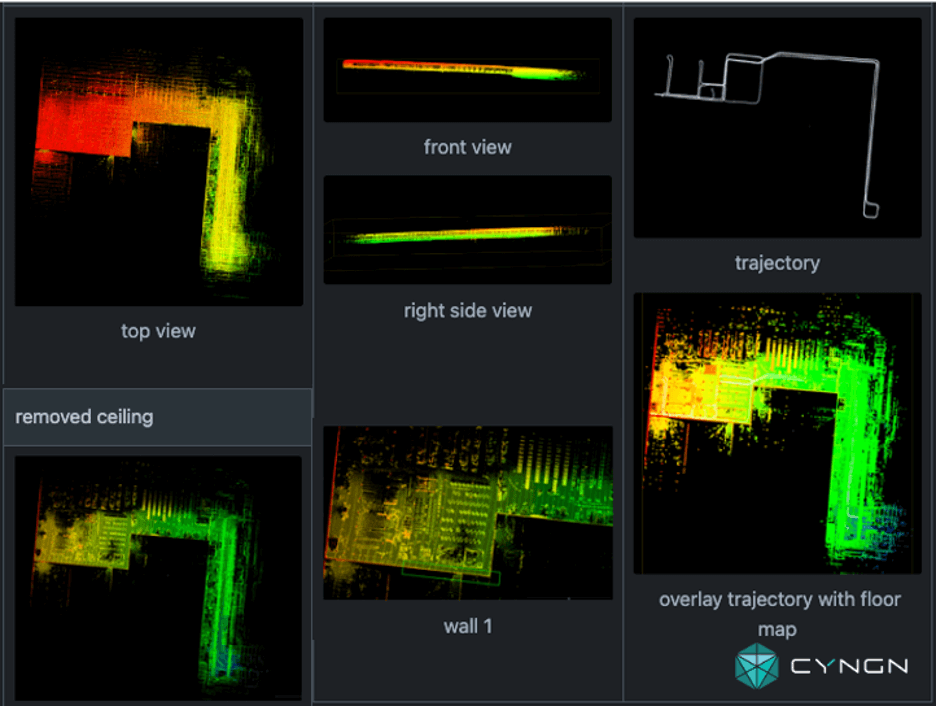

Figure 4: High-definition point-cloud maps of a manufacturing facility

Cyngn’s localization maps give DriveMod AMRs the foundation they need to navigate the environment with six degrees of freedom and centimeter-level accuracy. Best of all, DriveMod can be switched between autonomous and manual modes, so customers always have the option to hop onto a vehicle and get some ad hoc work done themselves.

NVIDIA’s powerful AI compute capabilities are uniquely positioned to help optimize the point-cloud registration algorithms that localize the vehicles, with approaches like Iterative Closest Point (ICP) and the Normal Distributions Transform (NDT) representing ripe areas for acceleration. NVIDIA CUDA software libraries are integrated into Cyngn’s toolkit for optimizing its mapping and localization solutions, which must operate within a massive search space (think of a lidar returning millions of points per second while mapping a 3-million-square-foot manufacturing facility).
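
To illustrate why registration is a good target for acceleration, below is a minimal 2D point-to-point ICP sketch in pure Python. Each iteration matches every source point to its nearest target point, then solves for the best rigid transform in closed form. The brute-force nearest-neighbor search, whose cost grows with the product of the two cloud sizes, dominates the runtime and is the kind of step GPUs parallelize well. This is a generic textbook ICP, not Cyngn's implementation, and it does not cover NDT.

```python
import math


def nearest(point, cloud):
    """Brute-force nearest neighbor (the expensive step at scale)."""
    return min(cloud, key=lambda q: (q[0] - point[0]) ** 2 + (q[1] - point[1]) ** 2)


def best_rigid_transform(src, dst):
    """Closed-form 2D rotation + translation minimizing sum |R p + t - q|^2."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    # Cross-covariance terms of the centered point sets.
    a = sum((p[0] - sx) * (q[0] - dx) + (p[1] - sy) * (q[1] - dy) for p, q in zip(src, dst))
    b = sum((p[0] - sx) * (q[1] - dy) - (p[1] - sy) * (q[0] - dx) for p, q in zip(src, dst))
    theta = math.atan2(b, a)
    c, s = math.cos(theta), math.sin(theta)
    # t = centroid(dst) - R * centroid(src)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def transform_points(theta, tx, ty, points):
    """Apply rotation theta, then translation (tx, ty), to 2D points."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def icp_2d(source, target, max_iterations=50, tol=1e-10):
    """Estimate (theta, tx, ty) that maps source onto target."""
    theta_total, tx_total, ty_total = 0.0, 0.0, 0.0
    current = list(source)
    for _ in range(max_iterations):
        matches = [nearest(p, target) for p in current]
        theta, tx, ty = best_rigid_transform(current, matches)
        current = transform_points(theta, tx, ty, current)
        # Compose the incremental step with the running estimate.
        c, s = math.cos(theta), math.sin(theta)
        tx_total, ty_total = (c * tx_total - s * ty_total + tx,
                              s * tx_total + c * ty_total + ty)
        theta_total += theta
        if max(abs(theta), abs(tx), abs(ty)) < tol:
            break
    return theta_total, tx_total, ty_total


# An L-shaped "wall" map and a scan of it taken from a slightly offset pose.
target = [(float(x), 0.0) for x in range(6)] + [(0.0, float(y)) for y in range(1, 4)]
true_theta, true_t = 0.05, (0.1, -0.05)
c, s = math.cos(true_theta), math.sin(true_theta)
# Build the source by applying the inverse of the true transform to the target.
source = [(c * (x - true_t[0]) + s * (y - true_t[1]),
           -s * (x - true_t[0]) + c * (y - true_t[1])) for x, y in target]
print(icp_2d(source, target))
```

Real localizers replace the brute-force search with spatial indexes or GPU kernels and add outlier rejection, but the match-then-solve loop is the same.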

The NVIDIA cuBLAS library provides accelerated linear algebra calculations and batched operations to speed up DriveMod’s feature-matching capabilities, while NVIDIA cuFilter provides intelligent point-cloud downsampling to reduce the search space and further improve localization efficiency. In terms that matter to end users, this means DriveMod AMRs can quickly figure out where they are, even in large sites, and are more robust to changes in dynamic environments.
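
One common way to downsample a point cloud is a voxel grid: space is divided into cubes of a chosen leaf size, and all points inside a cube are replaced by their centroid (PCL's `VoxelGrid` filter works this way). The pure-Python sketch below shows the idea; `voxel_downsample` and the leaf size are illustrative choices, and this is not cuFilter's API.

```python
import math
from collections import defaultdict


def voxel_downsample(points, leaf_size):
    """Replace all points that fall in the same cubic voxel with their centroid."""
    if leaf_size <= 0:
        raise ValueError("leaf_size must be positive")
    # Per-voxel running sums: [sum_x, sum_y, sum_z, count].
    buckets = defaultdict(lambda: [0.0, 0.0, 0.0, 0])
    for x, y, z in points:
        # floor() keeps negative coordinates in the correct voxel.
        key = (math.floor(x / leaf_size),
               math.floor(y / leaf_size),
               math.floor(z / leaf_size))
        acc = buckets[key]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in buckets.values()]


cloud = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (1.5, 0.0, 0.0), (-0.2, 0.0, 0.0)]
print(voxel_downsample(cloud, leaf_size=1.0))
```

The leaf size trades accuracy for speed: larger voxels leave fewer points for the registration step to match, at the cost of finer geometric detail.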